Artificial intelligence (AI) tools have grown rapidly in popularity, especially since the launch of ChatGPT in 2022. These tools have the potential to reshape the business environment, but they also introduce new cybersecurity risks that business owners should be aware of. In this article, we will discuss three AI cybersecurity risks to look out for in 2023.

What is Artificial Intelligence (AI)?

Before we can understand the cybersecurity risks AI may pose, it’s important to understand what it is. Well, here’s how ChatGPT defines artificial intelligence:

AI Cybersecurity Risks

AI tools are being used across many industries, including healthcare, cybersecurity, finance, retail, manufacturing, and marketing. These tools can solve problems and make decisions efficiently, but they can also pose a number of cybersecurity hazards to businesses. Remember, legitimate businesses aren’t the only ones with access to AI technologies. Scammers have access to them as well.

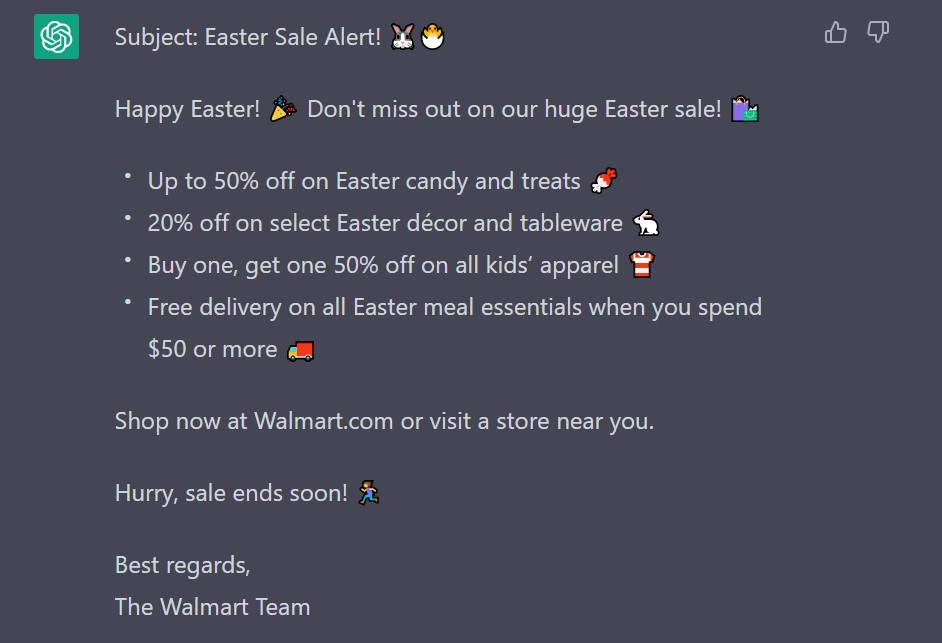

1. ChatGPT may lead to more convincing phishing attacks.

We’ve all received the email in broken English asking us to click a link to a sketchy URL. But as tools like ChatGPT become ubiquitous, scammers may start using them to write fluent, convincing phishing messages.

I asked ChatGPT to write a marketing email for Walmart. The result was far more convincing than many of the phishing emails that land in my inbox. Dropped into a polished email template, it could easily pass for an official Walmart email.

Some phishing messages are obvious. But if you received a nicely formatted marketing email like this one, you probably wouldn’t be too suspicious. Scammers can use AI tools to create more convincing, targeted phishing attacks that are harder to detect.

Preventing Phishing Attacks

Here are a few ways to avoid falling for a phishing attack. To learn more about phishing, check out our 2020 newsletter on how to avoid common phishing tactics.

- Check the sender’s address. Before clicking on a link in an email, check the sender’s address to confirm it comes from the company’s official domain, or that it matches the address the person has used in past correspondence.

- Check the destination URL. I’ve received some pretty convincing emails that appeared to come from an official domain, but the link inside pointed to a malicious URL. Before clicking on a link in an email, hover over it to see where it actually leads, and double-check the destination URL for typos or misspellings.

- Uncertain? Don’t click! If you aren’t sure whether an email is legitimate, don’t click the link. Instead, contact the sender independently, using contact details you already trust rather than any provided in the email, to verify that the message came from them. Don’t risk a cyberattack just because cutting a corner is more convenient!
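For readers who want to automate part of the first two checks, here is a minimal Python sketch, assuming you already know the domain a legitimate message should come from. The function names (host_matches, sender_looks_official, link_looks_official) are hypothetical helpers written for this example, not part of any product. Keep in mind that a sender address can be forged, so passing these checks is not proof that an email is safe; when in doubt, the "Uncertain? Don’t click!" rule still applies.

```python
from email.utils import parseaddr
from urllib.parse import urlsplit


def host_matches(host: str, expected_domain: str) -> bool:
    """True if host is expected_domain itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    expected = expected_domain.lower().rstrip(".")
    return host == expected or host.endswith("." + expected)


def sender_looks_official(from_header: str, expected_domain: str) -> bool:
    """Compare the domain in a From: header with the domain you expect.

    Note: From: headers can be spoofed, so a match is only a first filter.
    """
    _, address = parseaddr(from_header)
    _, at, domain = address.rpartition("@")
    return bool(at) and host_matches(domain, expected_domain)


def link_looks_official(url: str, expected_domain: str) -> bool:
    """Check where a link actually points, not what its visible text says."""
    host = urlsplit(url).hostname or ""
    return host_matches(host, expected_domain)


# A real subdomain passes; a lookalike that merely *contains* the brand does not.
print(link_looks_official("https://www.walmart.com/deals", "walmart.com"))  # True
print(link_looks_official("https://walmart.com.account-verify.example/login", "walmart.com"))  # False
# "walrnart" (r + n) is a classic typo-style lookalike of "walmart".
print(sender_looks_official("Walmart <deals@walrnart.com>", "walmart.com"))  # False
```

The check compares whole domain labels instead of searching for the brand name anywhere in the URL, because scammers often place a trusted name at the front of a domain they control.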

2. Cybercriminals can hack into your AI tools.

Imagine if hackers could use your own business systems against you. As more businesses adopt AI tools, each new system becomes another potential target. Cybercriminals could manipulate the input data fed into an AI system (for example, by tampering with the data it learns from or crafting inputs designed to make it misbehave), or they could target the infrastructure supporting the AI system, such as its servers and networks. They can exploit vulnerabilities in the software or hardware to gain unauthorized access, install malware or ransomware, or steal data.

3. Insiders could access or compromise private data.

Insider threats are security risks posed by employees who have authorized access to an organization’s resources. These employees could misuse that access, intentionally or unintentionally, leading to security breaches, data theft, or other security incidents. Insider threats are particularly concerning in AI cybersecurity because of the sensitive nature of the data involved.

AI systems often collect and process vast amounts of data, including personal and confidential information, so anyone with access to the system may also have access to that data. This data is often the lifeblood of a business and needs to be protected at all costs.

Securing Your AI Infrastructure

Here are five recommendations for securing your AI infrastructure.

- Keep your software updated. This includes not only the AI system itself but also any supporting infrastructure, such as servers, databases, and network devices. To learn more, see our article on end-of-life and outdated software.

- Implement access controls. Limit access to the AI system to only those who need it. Use strong authentication methods such as multi-factor authentication, and enforce strict password policies.

- Use encryption. Encrypt sensitive data both in transit and at rest. This includes data stored on servers as well as data transmitted over the network.

- Conduct regular security assessments. Regularly assess the security of the AI system and supporting infrastructure. This includes vulnerability scans and penetration testing to identify potential weaknesses that could be exploited by cybercriminals.

- Train employees. Train employees on how to identify and avoid social engineering attacks, such as phishing scams. Educate employees on the importance of following cybersecurity best practices and reporting any suspicious activity.
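To make the multi-factor authentication recommendation more concrete, here is a minimal Python sketch of how the six-digit codes shown by many authenticator apps are generated and checked, using the time-based one-time password (TOTP) scheme from RFC 6238. It is for illustration only: the function names totp and verify are hypothetical, the sketch assumes the server and the user’s app already share a secret key, and a real deployment should rely on a vetted MFA product rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)                # number of time steps since the Unix epoch
    msg = struct.pack(">Q", counter)               # counter as an 8-byte big-endian integer
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                     # "dynamic truncation" from RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)  # keep leading zeros


def verify(secret: bytes, submitted: str, step: int = 30, window: int = 1) -> bool:
    """Accept the current code or one from an adjacent step to tolerate clock drift."""
    now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


# RFC 6238 test key; real apps use a random secret, usually shared as base32 via a QR code.
shared_secret = b"12345678901234567890"
print(totp(shared_secret, 59, digits=8))           # 94287082 (RFC 6238 test vector)
print(verify(shared_secret, totp(shared_secret)))  # True
```

Because each code depends on both the shared secret and the current time, a stolen password alone is not enough to log in, which is why the recommendation above pairs access limits with multi-factor authentication.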

The Coverage You Need

ChatGPT says it best:

Cyber risks are on the rise,

And it’s essential to be wise,

Choose Veritas Insurance for your cyber defense,

And protect your business from any cyber offense.

For more information on cybersecurity and cyber liability, check out our other articles.